Mobile Systems | Machine Learning | Android App | CNN | Spectrograms

This project was completed as part of the Mobile and Distributed Systems module at SMU. The objective was to train two models, one to recognize voice and the other to recognize gestures, so that users could be correctly classified and granted access to the phone via an Android app.

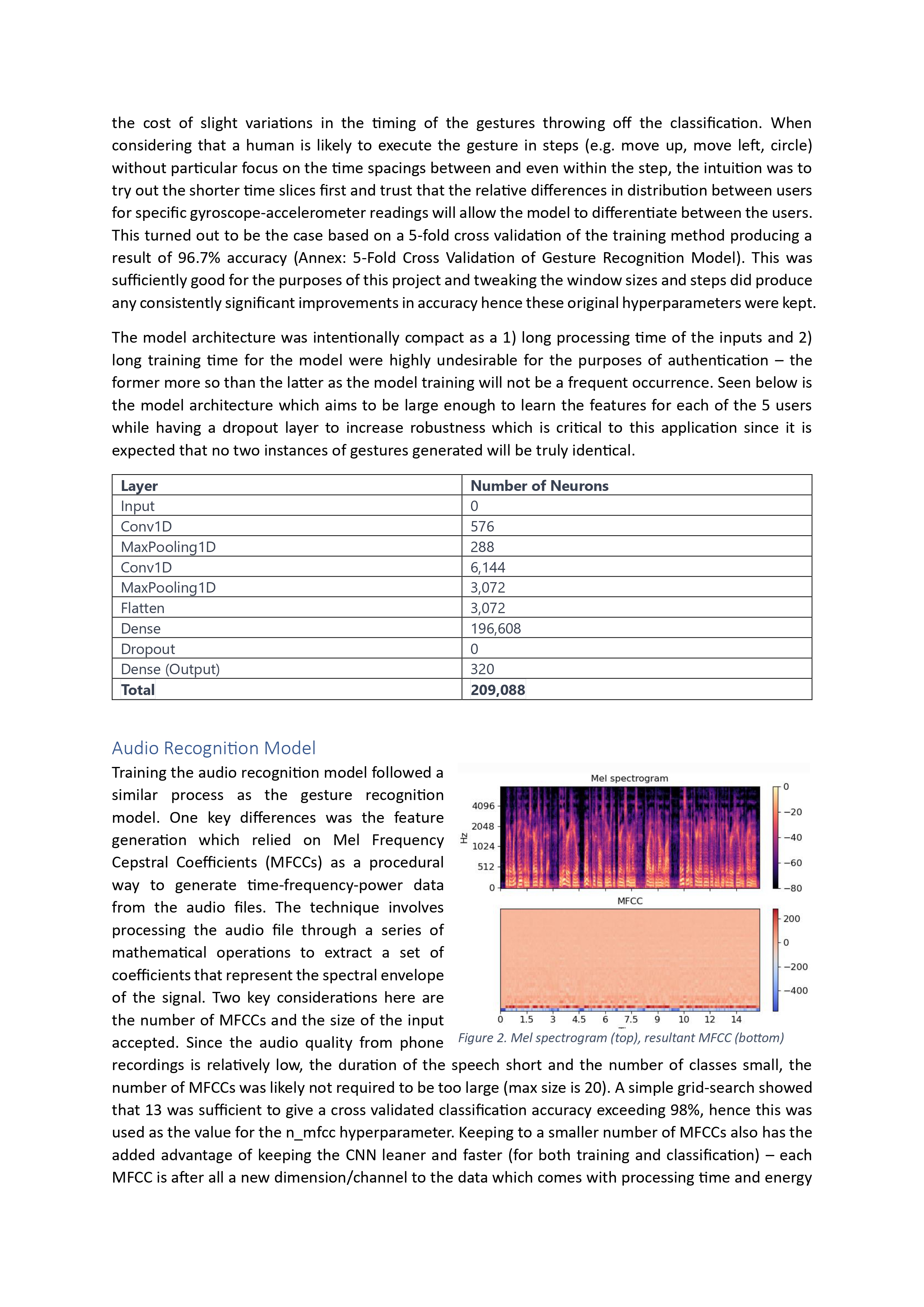

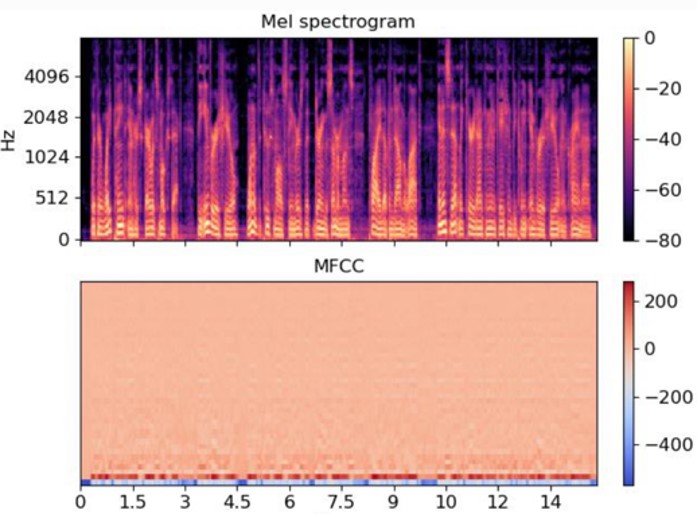

The training data for each user is made up of snapshots of their voice recording, taken at a fixed interval; the exact same approach was used for gesture recognition. This seemed to work well despite the clear drawback that treating each snapshot independently removes the time-dependence between data points. I tried to connect adjacent timeframes by letting each frame overlap its neighbour by a factor of 0.5 (i.e. half of each recording window is shared between two adjacent data points).
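The overlapping snapshots can be illustrated with a minimal sketch. This is not the project's actual preprocessing code: the function name `frame_signal`, the frame length, and the toy signal are all assumptions made for illustration. It only shows how a 0.5 overlap makes each frame share half of its samples with the next one.

```python
def frame_signal(samples, frame_len, overlap=0.5):
    """Split a 1-D sequence into fixed-length frames with fractional overlap.

    Hypothetical helper: name and parameters are illustrative assumptions.
    """
    if frame_len <= 0:
        raise ValueError("frame_len must be positive")
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")

    # Step between frame starts; an overlap of 0.5 gives a hop of half a frame.
    hop = max(1, round(frame_len * (1.0 - overlap)))

    frames = []
    start = 0
    # Keep only complete frames; a trailing partial frame is dropped.
    while start + frame_len <= len(samples):
        frames.append(list(samples[start:start + frame_len]))
        start += hop
    return frames


# Toy "recording" of 10 samples, cut into frames of 4 samples each.
signal = list(range(10))
frames = frame_signal(signal, frame_len=4, overlap=0.5)
for frame in frames:
    print(frame)
```

With these toy values, the second half of every frame reappears as the first half of the following frame, which is the only link between neighbouring data points that the model gets to see. In a real pipeline each frame would then be turned into one training example (for instance a spectrogram for the voice data).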

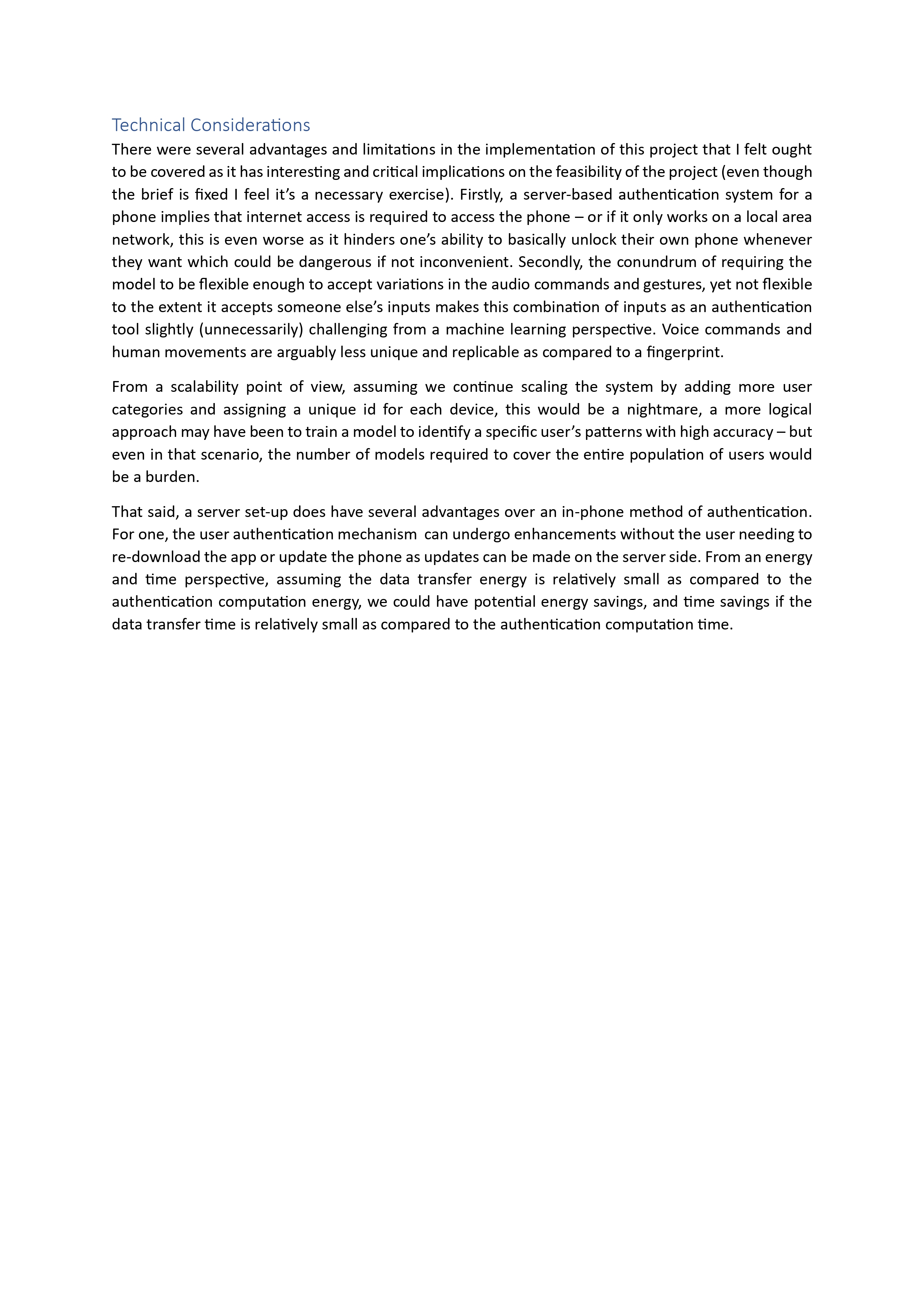

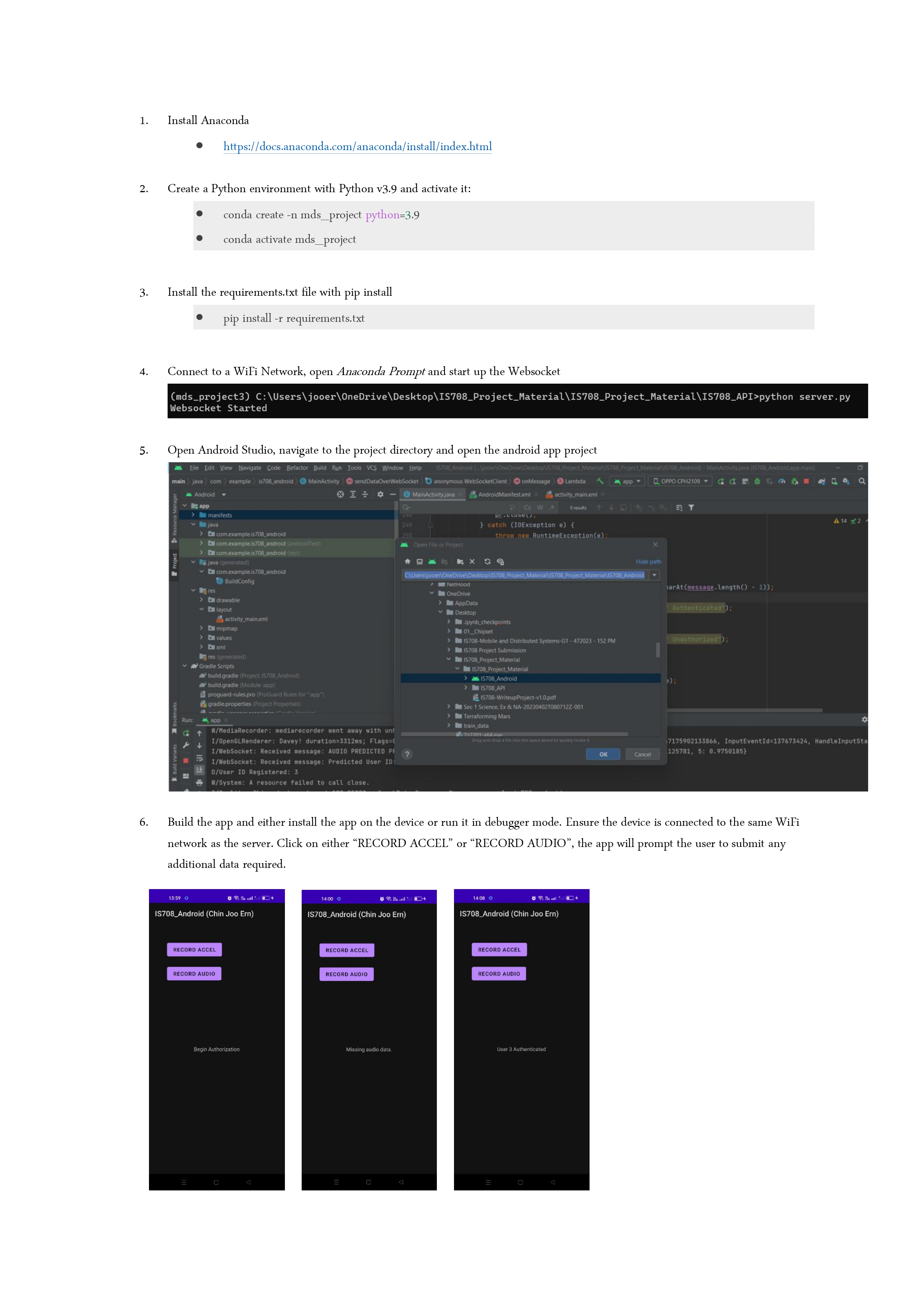

The README and design report explaining the project are shown below. This was an individual project, and the focus was on the mobility of the system (a mobile phone connected to a computer via WiFi and websockets). Data was collected on the phone and transferred to my laptop, which hosts the ML model. The prediction is then sent back to the phone, telling it whether the user is allowed access.
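The round trip between phone and laptop can be sketched as a single request/reply handler on the laptop side. Everything here is an assumption for illustration: the JSON message fields (`modality`, `features`, `user`, `access_granted`), the `AUTHORISED` set, and the `classify_stub` placeholder standing in for the trained model are hypothetical, and the websocket transport itself is left out so that the sketch runs on its own.

```python
import json

# Hypothetical set of user labels that are allowed to unlock the phone.
AUTHORISED = {"user_a"}


def classify_stub(features):
    """Placeholder for the trained model; NOT the project's real classifier."""
    return "user_a" if sum(features) >= 0 else "unknown"


def handle_message(raw, classify=classify_stub):
    """Turn one message from the phone into one reply for the phone.

    `raw` is assumed to be a JSON string such as
    {"modality": "voice", "features": [...]}.
    """
    request = json.loads(raw)
    label = classify(request["features"])
    reply = {
        "modality": request["modality"],
        "user": label,
        "access_granted": label in AUTHORISED,
    }
    return json.dumps(reply)


# Example: what the phone might send, and what it would get back.
incoming = json.dumps({"modality": "voice", "features": [0.1, 0.2, 0.3]})
print(handle_message(incoming))
```

In a setup like the one described, a function of this shape would be called from the websocket server's receive loop, and its return value would be sent back over the same connection.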

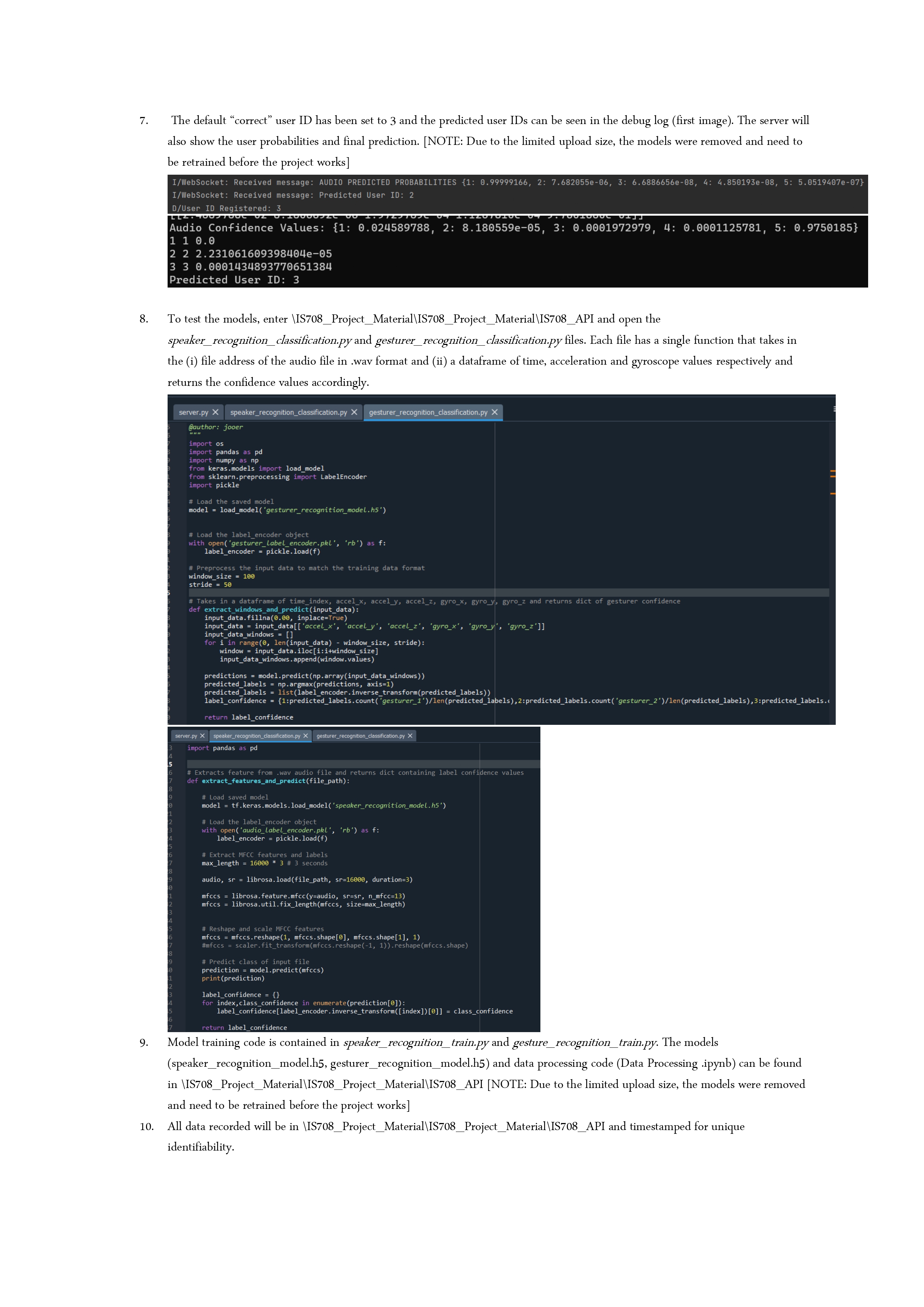

The page will be split into 2 main segments: